Interesting Adversarial Defense Methods

Introduction

Today I want to discuss some of the adversarial defense methods that I find most interesting. In short, this post covers several unique techniques that can be used to defend against adversarial attacks.

For those who do not yet know what an adversarial attack really is, it is basically a way to fool a model by applying a change that is imperceptible to the human eye but that significantly changes the prediction of our AI model.

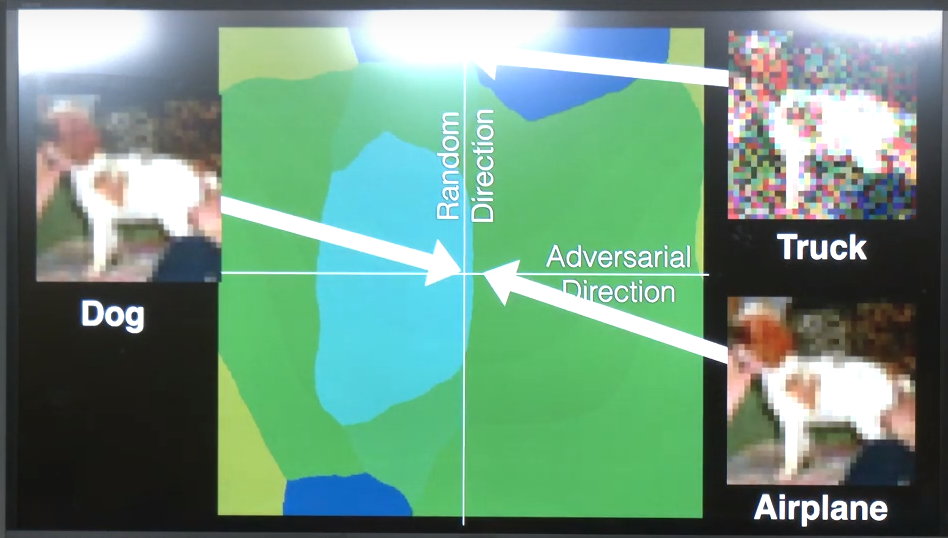

As you can see from the image above, we can find a small change in the adversarial direction that is invisible to the human eye yet significantly changes the model’s prediction (e.g., from dog to airplane).

One common explanation for this is the curse of dimensionality. Since the input space has a very large number of dimensions, there almost always exist regions where the decision boundary is imperfect, as shown in the figure above. In that image, we can move the input a little, with only a subtle change, into a region that is already classified as airplane.
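To make the role of dimensionality concrete, here is a minimal sketch on a toy linear classifier. The weights `w`, the random "image" `x`, and the dimension `d` are all made up for illustration and are not from any real model. The point it shows is that a per-pixel step of size `eps` in the direction `sign(w)` shifts the score by `eps` times the L1 norm of `w`, which grows with the number of dimensions.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 3072  # assumption: a flattened 32x32 RGB image

# Hypothetical linear "classifier": the sign of w @ x decides the class.
w = rng.normal(size=d)
x = rng.uniform(0.0, 1.0, size=d)

score = w @ x

# Moving every pixel by eps in the direction sign(w) changes the score by
# eps * ||w||_1, so a tiny eps is enough to cross the decision boundary.
eps = 1.01 * abs(score) / np.abs(w).sum()
x_adv = x - np.sign(score) * eps * np.sign(w)  # not clipped to [0, 1] for simplicity

print(f"per-pixel change: {eps:.4f}")
print(f"score before: {score:.2f}, score after: {w @ x_adv:.2f}")
```

Real attacks on deep networks follow the same idea but use the gradient of the loss with respect to the input instead of a fixed weight vector.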

How can we defend our model against this kind of attack? Let’s discuss some of the most interesting approaches!

Defense-GAN

The idea of Defense-GAN is to use the representational power of a GAN’s generator to remove adversarial perturbations. The paper assumes that if the input image is changed along the adversarial direction, then it also moves away from the distribution of clean images.

For those who are not familiar with the GAN concept, it essentially has two main components. The first one is the generator \(G\), which maps a low-dimensional latent vector \(\mathbf{z}\) to an image. The second one is the discriminator \(D\), which is trained to distinguish real (clean, unperturbed) images from the ones produced by the generator.

Initially, we train the GAN on the clean images in our dataset, so that the generator learns to map low-dimensional (latent) vectors to high-dimensional images that look like clean data. The generator \(G\), which is a neural network (we can think of it as a function), is responsible for this mapping.

To ensure that the generated images are indeed similar to the real ones, the discriminator \(D\) tries to tell generated images apart from real ones, while the generator is trained to fool it. This adversarial game pushes the generated images toward the distribution of clean images.
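For reference, the standard GAN training objective is usually written as the following min-max game, where \(p_{\text{data}}\) is the distribution of clean images and \(p_{\mathbf{z}}\) is the prior over latent vectors (these two symbols are introduced here for illustration; the Defense-GAN paper may use a variant of this loss):

\[
\min_{G} \max_{D} \; \mathbb{E}_{\mathbf{x} \sim p_{\text{data}}}\big[\log D(\mathbf{x})\big] + \mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}}\big[\log\big(1 - D(G(\mathbf{z}))\big)\big]
\]

The discriminator maximizes this value by scoring real images high and generated images low, while the generator minimizes it by producing images that the discriminator cannot tell apart from real ones.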

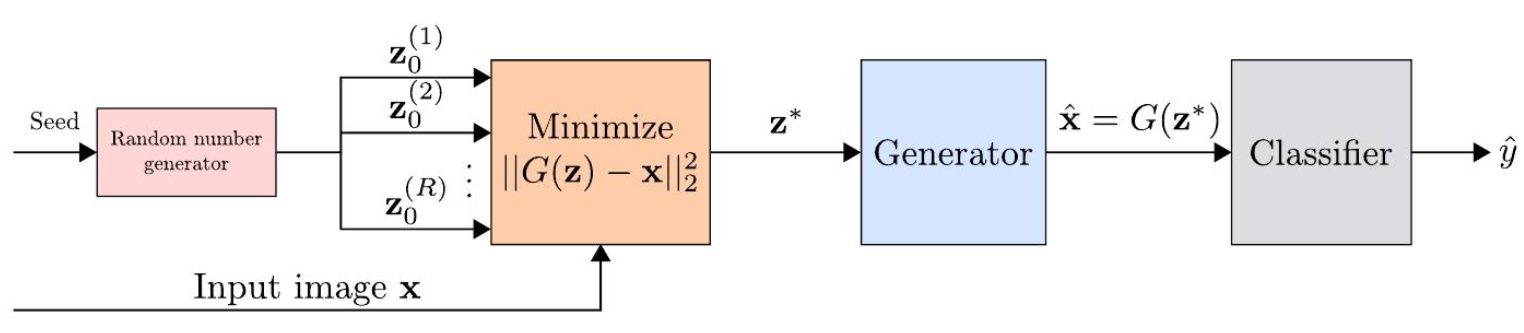

So how can Defense-GAN defend against adversarial attacks? In short, before feeding the input to the classifier, the algorithm projects the input image onto the range of the generator: it tries to find the latent vector \(\mathbf{z}^*\) such that the generated image \(G(\mathbf{z}^*)\) is as close as possible to the input image \(\mathbf{x}\).

This helps avoid wrong predictions because the classifier only sees an image reconstructed from a latent vector, and the generator was trained to produce images that follow the clean distribution. The search for that vector happens at inference time.

But there is an additional challenge. It turns out that the minimization problem faced by this algorithm is highly non-convex, which means that there are many local minima where the optimization process can get stuck.

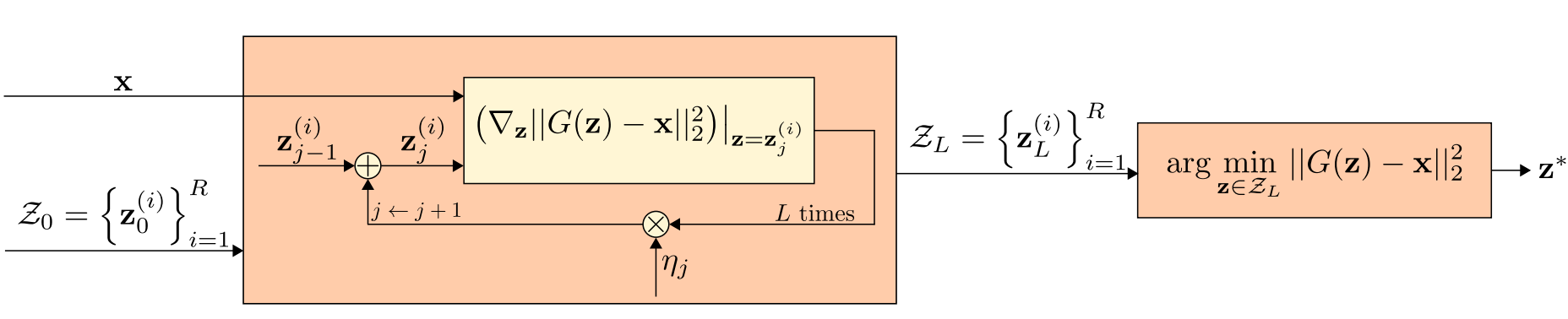

To address this, Defense-GAN uses \(L\) steps of gradient descent (GD), an iterative optimization algorithm, to navigate the latent space. At each step, the current estimate of \(\mathbf{z}\) is updated to reduce the difference \(\|G(\mathbf{z})-\mathbf{x}\|_{2}^{2}\). \(L\) is the number of iterations GD runs to refine the solution.
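Written out, the projection and a plain gradient descent update look roughly like this. The step size \(\eta\) and the iteration index \(k\) are symbols introduced here for illustration:

\[
\mathbf{z}^* = \arg\min_{\mathbf{z}} \|G(\mathbf{z})-\mathbf{x}\|_{2}^{2},
\qquad
\mathbf{z}_{k+1} = \mathbf{z}_{k} - \eta \, \nabla_{\mathbf{z}} \|G(\mathbf{z})-\mathbf{x}\|_{2}^{2} \,\Big|_{\mathbf{z}=\mathbf{z}_{k}},
\quad k = 0, \ldots, L-1
\]

Note that only \(\mathbf{z}\) is updated; the generator’s weights stay fixed during this search.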

In addition to that, Defense-GAN also employs multiple random restarts, meaning it initializes \(\mathbf{z}\) at \(R\) different points in the latent space (denoted as \(\mathbf{z}_0^{(1)}, \mathbf{z}_0^{(2)}, \ldots, \mathbf{z}_0^{(R)}\)). For each restart, GD runs for \(L\) steps. This strategy increases the likelihood of finding the global minimum, or at least a very good approximation, rather than getting stuck in a poor local minimum.

Finally, after the \(L\) GD steps of every restart, the algorithm selects the \(\mathbf{z}^*\) whose generated image is closest to \(\mathbf{x}\). The output image \(G(\mathbf{z}^*)\) is considered a cleansed version of the input image and is passed to the classifier for label prediction.
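The whole procedure can be sketched in a few lines of NumPy. This is only an illustration under strong assumptions: the "generator" is a made-up toy function `G(z) = tanh(W z)` with random weights instead of a trained GAN, the gradient is written by hand, and the values of `L`, `R`, and `lr` are arbitrary choices rather than the paper’s settings.

```python
import numpy as np

rng = np.random.default_rng(0)
latent_dim, image_dim = 4, 64

# Toy stand-in for a trained generator (assumption): G(z) = tanh(W z).
W = rng.normal(size=(image_dim, latent_dim))

def G(z):
    return np.tanh(W @ z)

def loss(z, x):
    # Squared L2 distance between the generated image and the input.
    return np.sum((G(z) - x) ** 2)

def grad(z, x):
    g = G(z)
    # Chain rule through tanh: d/dz ||tanh(W z) - x||^2
    return 2.0 * W.T @ ((g - x) * (1.0 - g ** 2))

def defense_gan_project(x, L=300, R=10, lr=0.002):
    best_z, best_loss = None, np.inf
    for _ in range(R):                    # R random restarts
        z = rng.normal(size=latent_dim)   # random starting point z_0^(r)
        for _ in range(L):                # L gradient descent steps
            z = z - lr * grad(z, x)
        current = loss(z, x)
        if current < best_loss:           # keep the closest reconstruction
            best_z, best_loss = z, current
    return best_z, best_loss

# A "clean" image in the generator's range plus a small perturbation.
x_clean = G(rng.normal(size=latent_dim))
x_adv = x_clean + 0.05 * np.sign(rng.normal(size=image_dim))

z_star, err = defense_gan_project(x_adv)
x_rec = G(z_star)  # this reconstruction is what the classifier would see
print("distance input -> reconstruction:", np.sqrt(err))
print("distance clean -> reconstruction:", np.linalg.norm(x_rec - x_clean))
```

With a real GAN, the gradient would come from automatic differentiation through the generator network, and the restarts could be run in parallel as a batch.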

Input Transformation

Now, let’s discuss another fascinating defense mechanism, Input Transformation by Guo et al. [2].

The motivation behind this approach is based on a simple yet profound observation: adversarial attacks slightly alter certain statistics of the input image, thus changing the model’s prediction. These perturbations are not random but optimized so that they significantly impact the model’s output.

But this also means that we can manipulate these adversarial alterations in a way that not only negates their effects but also preserves the essence of the original image for accurate classification.

The key intuition of this proposed method is quite straightforward. It is based on the hypothesis that by applying specific transformations to an image, we can disrupt the adversarial perturbations while retaining the critical information necessary for the model to make accurate predictions.

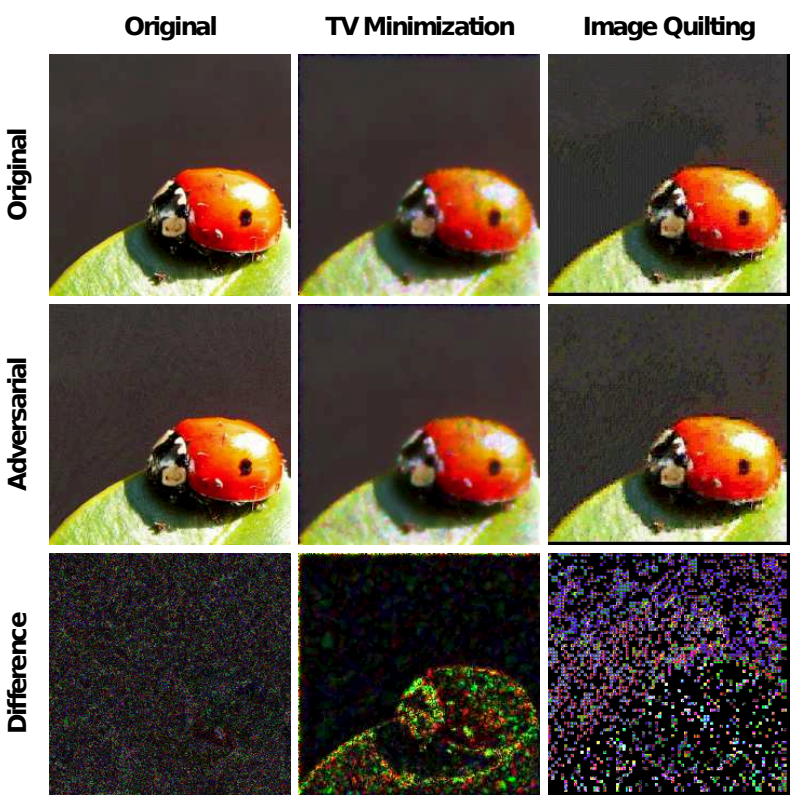

As you can see from the figure above, carefully chosen image transformations, such as TV minimization and image quilting, produce meaningfully different results: the first one seems to keep the core object itself, while the other puts more emphasis on the background.

The paper [2] proposes a series of image transformations, each with its unique way of combating adversarial effects:

- Image Cropping and Rescaling: This technique alters the spatial positioning of adversarial perturbations. By cropping and rescaling images during training and averaging predictions over random image crops at test time, it can hinder the effectiveness of adversarial attacks.

- Bit-Depth Reduction: The idea is to perform a form of quantization, reducing the image to fewer bits, specifically to 3 bits in the experiments. This process helps remove small adversarial variations in pixel values.

- JPEG Compression: Similar to bit-depth reduction, JPEG compression removes small perturbations. Here the compression is performed at a quality level of 75 out of 100, to maintain image quality while eliminating adversarial effects.

- Total Variation Minimization: This approach combines pixel dropout with total variation minimization. It reconstructs the simplest image consistent with a selected set of pixels, essentially filtering out the adversarial noise.

- Image Quilting: This approach assumes that any part of the image could be affected by adversarial noise. The algorithm uses a database of clean patches (small parts of images that are known to be free from adversarial modifications) and replaces sections of the input image with similar-looking patches from the database.
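As a small illustration of the simplest transformation in the list, here is a sketch of bit-depth reduction. It assumes images are float arrays in \([0, 1]\); the function name `reduce_bit_depth`, the random "image", and the perturbation size are made up for the example and are not taken from the paper’s code.

```python
import numpy as np

def reduce_bit_depth(image, bits=3):
    """Quantize a float image in [0, 1] to 2**bits levels per channel."""
    max_level = 2 ** bits - 1
    return np.round(image * max_level) / max_level

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(8, 8, 3))  # stand-in for a clean image
noise = rng.uniform(-0.01, 0.01, size=image.shape)  # stand-in for a small perturbation
perturbed = np.clip(image + noise, 0.0, 1.0)

# Most pixel values fall into the same quantization bin before and after
# the perturbation, so the small change is erased for those pixels.
same = reduce_bit_depth(image) == reduce_bit_depth(perturbed)
print(f"{same.mean():.0%} of pixel values are identical after quantization")
```

The other transformations follow the same pattern: they are applied to the input as a preprocessing step before the image reaches the classifier.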

Each of these transformations contributes to destabilizing the adversarial perturbations, improving the model’s ability to classify images accurately. The beauty of this method lies in its simplicity and the non-invasive nature of the transformations, making it an elegant solution to such a complex problem.

For the other defense methods, stay tuned :) I will add more interesting approaches in the future :)

References

- Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models. International Conference on Learning Representations (ICLR), 2018

-